Imagine building powerful microservices with streaming SQL – it’s a game-changer thanks to its built-in functions, custom user-defined functions (UDFs), handy materialized results, and seamless connections with cutting-edge ML and AI models.

“If the only tool you have is a hammer, you tend to see every problem as a nail.” — Abraham Maslow, 1966

Microservices are awesome for building business services! They give you so much flexibility: pick your own tools, technologies, languages, and even release them on your own schedule. You’re in full control of your dependencies. But here’s the thing: microservices aren’t always the perfect fit for *every* problem. Just like Maslow said, if we limit our options, we might end up trying to force a less-than-ideal solution onto our challenges. In this article, we’re going to explore streaming SQL. Why? So you can add another fantastic tool to your toolkit for tackling business problems. But first, let’s clear something up: how is it different from a regular, everyday (batch) SQL query?

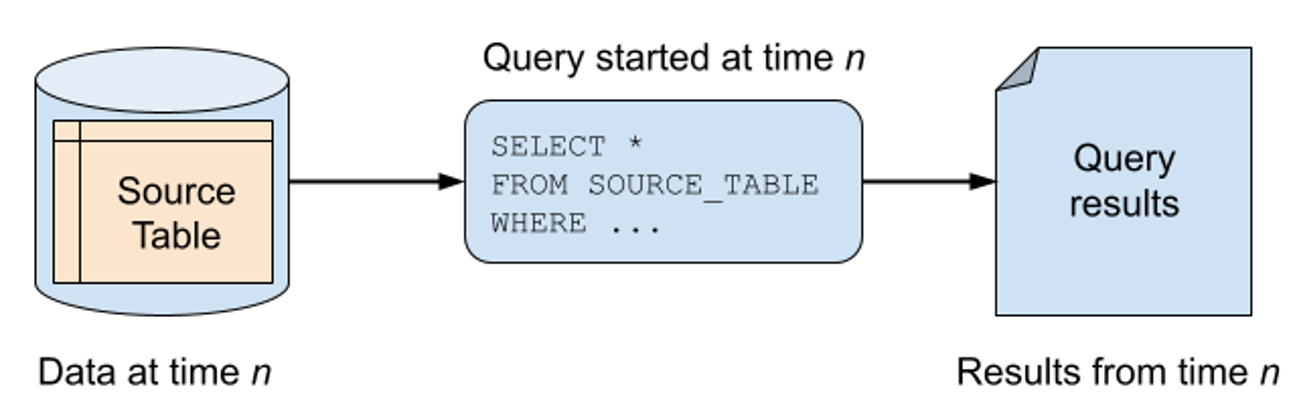

Think about SQL queries on a traditional database, like Postgres. They’re what we call “bounded” queries. They work with a fixed set of data—whatever was in the database *at the exact moment* you ran the query. If any changes happen to that data after your query finishes, those new bits won’t show up in your results. To see the new stuff, you’d simply have to run the query again.

Here’s what a “bounded” SQL query looks like on a standard database table.

Confluent

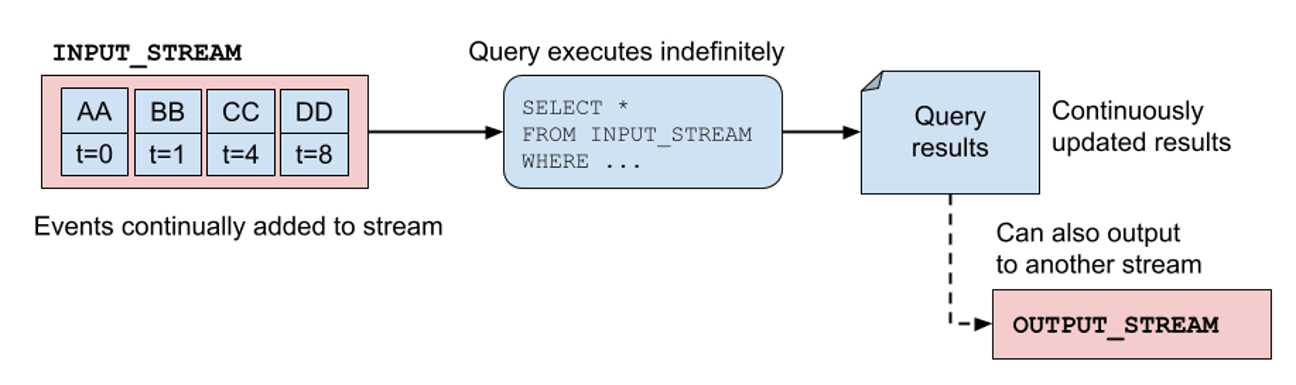

Now, a streaming SQL query is a whole different beast! It works with an *unbounded* data set – usually one or more streams of events that just keep coming. In this setup, the streaming SQL engine continuously takes in events from the stream(s) one by one, carefully ordering them by their timestamps and offsets. The coolest part? This streaming SQL query runs *forever*, processing events as soon as they arrive. It updates its internal state, crunches out results, and can even send new events to other streams further down the line.

And here’s the magic of an “unbounded” SQL query, continuously working on an event stream.

Confluent
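To make the bounded versus unbounded distinction concrete, here is a minimal plain-Java sketch. It is not Flink code: `RunningTotal`, `boundedSum`, and `onEvent` are hypothetical names chosen for illustration. The bounded method answers once over a fixed snapshot, while the unbounded one updates its state and emits a new result for every event that arrives.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Illustrative only: RunningTotal is a hypothetical name, not a Flink API.
final class RunningTotal {
    private long total = 0;

    // Bounded query: runs once over a fixed snapshot and returns one answer.
    static long boundedSum(List<Integer> snapshot) {
        long sum = 0;
        for (int value : snapshot) {
            sum += value;
        }
        return sum;
    }

    // Unbounded query: invoked for every arriving event. It updates its
    // internal state and emits a fresh result each time.
    long onEvent(int value) {
        total += value;
        return total;
    }

    public static void main(String[] args) {
        List<Integer> snapshot = Arrays.asList(5, 7);
        System.out.println("bounded result: " + boundedSum(snapshot));

        RunningTotal query = new RunningTotal();
        List<Long> emitted = new ArrayList<>();
        for (int value : new int[] {5, 7, 3}) { // 3 arrives after the snapshot
            emitted.add(query.onEvent(value));
        }
        System.out.println("streaming results: " + emitted);
    }
}
```

The bounded call never sees the late event unless you run it again, whereas the streaming version keeps producing updated totals for as long as events keep coming.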

Apache Flink is a brilliant example of a streaming SQL solution in action. Beneath the surface, it’s a multi-layered streaming framework that starts with low-level building blocks, moves up to a DataStream API, then a more abstract Table API, and finally, at the very top, offers a streaming SQL API. Just a heads-up: Flink’s SQL streaming syntax might look a little different from other streaming SQLs out there, since there isn’t one universal standard for streaming SQL syntax. While some services stick to ANSI standard SQL, others (like Flink) have their own cool variations to fit their streaming frameworks.

Building services with streaming SQL comes with some seriously good perks! For starters, you get to tap into these incredibly powerful streaming frameworks without needing to get bogged down in all the deep, underlying syntax. Secondly, you can offload all the tricky bits of streaming—like repartitioning data, rebalancing workloads, and recovering from failures—directly to the framework. Talk about a relief! And third, you gain the freedom to write your streaming logic in plain SQL instead of having to learn a framework’s specialized domain-specific language. Many of the really robust streaming frameworks (like Apache Kafka Streams and Flink) are built on the Java Virtual Machine (JVM), and they often have limited support for other programming languages.

One last thing worth noting: Flink uses the TABLE type as a core data element. You can really see this if you check out this four-layer Flink API diagram, where the SQL layer sits right on top of the Table API. Flink cleverly uses both streams and tables as its fundamental data types. This means you can easily transform a stream into a table, and just as simply, turn a table back into a stream by adding its updates to an output stream. For all the juicy details, hop over to the official documentation!
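The stream and table relationship can be sketched in a few lines of plain Java. This is only an illustration under simple assumptions (string keys, integer values, everything in memory), and `MaterializedTable` is a hypothetical class, not part of Flink. Applying a stream of keyed events builds the table, and recording every update as it is applied gives you the stream back.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Illustrative only: a hypothetical in-memory stand-in for a Flink table.
final class MaterializedTable {
    private final Map<String, Integer> rows = new HashMap<>();
    private final List<String> changelog = new ArrayList<>();

    // Stream -> table: apply each keyed event; the latest value per key wins.
    // Table -> stream: every applied update is appended to an output changelog.
    void upsert(String key, int value) {
        rows.put(key, value);
        changelog.add(key + "=" + value);
    }

    Integer get(String key) {
        return rows.get(key);
    }

    List<String> changelog() {
        return changelog;
    }

    public static void main(String[] args) {
        MaterializedTable table = new MaterializedTable();
        table.upsert("alice", 10);
        table.upsert("bob", 5);
        table.upsert("alice", 12); // overwrites alice's earlier row

        System.out.println("alice -> " + table.get("alice"));
        System.out.println("changelog: " + table.changelog());
    }
}
```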

Alright, let’s switch gears and explore a few common ways streaming SQL is used. You’ll quickly see where it truly shines!

Pattern 1: Seamlessly integrating with AI and machine learning

First up, streaming SQL lets you call artificial intelligence (AI) and machine learning (ML) models directly from your SQL code. With AI becoming a major player for so many business tasks, this gives you a way to use these models in an event-driven fashion, without the hassle of setting up, running, and managing a separate microservice. This pattern works a lot like the user-defined function (UDF) approach: you build your model, register it so the system knows about it, and then call it directly within your SQL query.

The Flink documentation really dives deep into how you’d connect a model:

    CREATE MODEL sentiment_analysis_model
    INPUT (text STRING COMMENT 'Input text for sentiment analysis')
    OUTPUT (sentiment STRING COMMENT 'Predicted sentiment (positive/negative/neutral/mixed)')
    COMMENT 'A model for sentiment analysis of text'
    WITH (
      'provider' = 'openai',
      'endpoint' = 'https://api.openai.com/v1/chat/completions',
      'api-key' = '',
      'model' = 'gpt-3.5-turbo',
      'system-prompt' = 'Classify the text below into one of the following labels: [positive, negative, neutral, mixed]. Output only the label.'
    );

Source: Flink Examples

This model declaration is essentially a setup, a bunch of “wiring and configs” that lets you use your model right within your streaming SQL code. For instance, you could use this declaration to figure out the sentiment of text found in an event. Just remember that ML_PREDICT needs both the specific model name you’re using and the text parameter:

    INSERT INTO my_sentiment_results
    SELECT text, sentiment
    FROM input_event_stream, LATERAL TABLE(ML_PREDICT('sentiment_analysis_model', text));

Pattern 2: Crafting custom business logic as functions

While streaming SQL comes packed with many native functions, it’s just not practical to include *everything* directly into the language syntax. That’s precisely where user-defined functions (UDFs) step in! These are functions that *you* define, and your program can then execute them right from your SQL statements. UDFs are super versatile; they can even call external systems and create side effects that aren’t natively supported by standard SQL. You’ll define your UDF by writing the function code in a separate file, then upload it to your streaming SQL service before you run your SQL statement.

Let’s take a peek at a Flink example.

    // Declare the UDF in a separate Java file.
    package com.namespace;

    import org.apache.flink.table.functions.ScalarFunction;

    // Returns 2 for high risk, 1 for normal risk, 0 for low risk.
    public class DefaultRiskUDF extends ScalarFunction {
        public Integer eval(Integer debt, Integer interest_basis_points,
                            Integer annual_repayment, Integer timespan_in_years) throws Exception {
            int computed_debt = debt;
            // Each year: add the interest on the current balance, then subtract the repayment.
            for (int i = 0; i < timespan_in_years; i++) {
                computed_debt = computed_debt + (computed_debt * interest_basis_points / 10000)
                        - annual_repayment;
            }
            if (computed_debt >= debt) return 2;          // debt did not shrink
            else if (computed_debt > debt / 2) return 1;  // less than half repaid
            else return 0;                                // at least half repaid
        }
    }

Next up, you’ll compile that Java file into a handy JAR file and upload it to a spot your streaming SQL framework can easily reach. Once that JAR is loaded, you’ll need to officially register it with the framework. After that, guess what? You can start using it directly in your streaming SQL statements! Here’s a quick look at how you’d register and then call it.

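    -- Register the function. The class name must match the compiled UDF class.
    CREATE FUNCTION DefaultRiskUDF
      AS 'com.namespace.DefaultRiskUDF'
      USING JAR '';

    -- Invoke the function to compute the risk of:
    -- 100k debt over 15 years, 4% interest rate (400 basis points), and a 10k annual repayment rate.
    -- The alias is computed in a subquery because WHERE cannot reference a SELECT alias directly.
    SELECT UserId, RiskRating
    FROM (
      SELECT UserId, DefaultRiskUDF(100000, 400, 10000, 15) AS RiskRating
      FROM UserFinances
    ) AS rated
    WHERE RiskRating >= 1;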

This UDF helps us figure out the risk of a borrower defaulting on a loan, giving us 0 for low risk, 1 for normal risk, and a 2 for high-risk borrowers or accounts that are in trouble. While this UDF is a pretty straightforward and made-up example, you can actually do *much* more complex operations using almost anything from the standard Java libraries! You won’t have to build an entire microservice just to get some functionality that’s missing from your streaming SQL solution. Instead, you can just upload what you need and call it directly from your code.
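Because the risk calculation is ordinary Java, you can exercise it before packaging it into a JAR. The sketch below copies the same integer arithmetic into a standalone class with no Flink dependency. `DefaultRiskCheck` and its `risk` method are hypothetical names, and the three sets of inputs are made-up test values.

```java
// Illustrative only: a standalone copy of the UDF's arithmetic for local testing.
final class DefaultRiskCheck {

    // Same rule as the UDF: 2 = high risk, 1 = normal risk, 0 = low risk.
    static int risk(int debt, int interestBasisPoints, int annualRepayment, int years) {
        int computedDebt = debt;
        for (int i = 0; i < years; i++) {
            // Integer division truncates, exactly as in the UDF.
            computedDebt = computedDebt
                    + (computedDebt * interestBasisPoints / 10000)
                    - annualRepayment;
        }
        if (computedDebt >= debt) return 2;
        if (computedDebt > debt / 2) return 1;
        return 0;
    }

    public static void main(String[] args) {
        // The article's scenario: 100k debt, 400 basis points, 10k repaid yearly, 15 years.
        System.out.println(risk(100000, 400, 10000, 15)); // prints 0
        // Repayments only slightly outpace interest over 5 years.
        System.out.println(risk(100000, 400, 5000, 5));   // prints 1
        // Repayments are smaller than the interest, so the debt grows.
        System.out.println(risk(100000, 400, 1000, 15));  // prints 2
    }
}
```

One design caveat: the multiplication uses 32-bit `int` arithmetic, so very large debts could overflow. Switching to `long` would be a reasonable hardening step if your inputs can get that big.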

Pattern 3: Simple filters, aggregations, and joins

This pattern takes us back to the core, yet incredibly powerful, functions built right into the streaming SQL framework. A common and popular use for streaming SQL is basic filtering: you keep all the records that match certain criteria and simply discard the rest. Take a look at this SQL filter—it only returns records where total_price is greater than 10.00. You can then send these filtered results to a table or an event stream.

    SELECT *
    FROM orders
    WHERE total_price > 10.00;

Windowing and aggregations are another fantastic set of features for streaming SQL. In this security example, we’re counting how many times a specific user tried to log in within a one-minute tumbling window. (By the way, you can also use other cool window types like sliding or session!)

    SELECT
      user_id,
      COUNT(user_id) AS login_count,
      TUMBLE_START(event_time, INTERVAL '1' MINUTE) AS window_start
    FROM login_attempts
    GROUP BY user_id, TUMBLE(event_time, INTERVAL '1' MINUTE);

Once you’ve got the number of login attempts for a user within that window, you can easily filter for a higher value (say, anything above 10). If it hits that threshold, you can trigger some business logic inside a UDF – perhaps temporarily locking them out as a clever anti-hacking measure.
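If you are curious what the engine is doing on your behalf, here is a rough plain-Java sketch of a per-user, one-minute tumbling window with a lockout threshold. It is deliberately naive: `TumblingLoginCounter` is a hypothetical class, timestamps are epoch milliseconds, and it ignores out-of-order events and never evicts old windows, both of which a real streaming framework handles for you.

```java
import java.util.HashMap;
import java.util.Map;

// Illustrative only: a naive in-memory tumbling window, not how Flink implements it.
final class TumblingLoginCounter {
    static final long WINDOW_MS = 60_000L; // one-minute windows
    static final int LOCK_THRESHOLD = 10;  // assumed threshold from the text

    // Key is "userId@windowStart", value is the count in that window.
    private final Map<String, Integer> counts = new HashMap<>();

    // Tumbling windows do not overlap: each timestamp maps to exactly one window.
    static long windowStart(long eventTimeMs) {
        return eventTimeMs - (eventTimeMs % WINDOW_MS);
    }

    // Records one attempt and returns the user's running count for that window.
    int onLoginAttempt(String userId, long eventTimeMs) {
        String key = userId + "@" + windowStart(eventTimeMs);
        return counts.merge(key, 1, Integer::sum);
    }

    static boolean shouldLock(int countInWindow) {
        return countInWindow > LOCK_THRESHOLD;
    }

    public static void main(String[] args) {
        TumblingLoginCounter counter = new TumblingLoginCounter();
        int last = 0;
        for (int i = 0; i < 12; i++) {
            last = counter.onLoginAttempt("mallory", i * 1_000L); // 12 tries in minute 0
        }
        System.out.println("attempts in first window: " + last);
        System.out.println("lock account: " + shouldLock(last));
        // The next minute starts a fresh window, so the count resets.
        System.out.println("next window count: " + counter.onLoginAttempt("mallory", 61_000L));
    }
}
```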

And finally, you can also combine data from multiple streams with just a few straightforward commands! Joining streams (whether as streams or as tables) is actually pretty tricky to do well without a dedicated streaming framework, especially when you factor in things like fault tolerance, scalability, and performance. In our example here, we’re bringing together Product data and Orders data by matching their product IDs, giving us a richer, combined Order + Product result.

    SELECT *
    FROM Orders
    INNER JOIN Product
      ON Orders.productId = Product.id;

Just a quick heads-up: not all streaming frameworks (SQL or otherwise) can handle primary-to-foreign-key joins. Some only let you do primary-to-primary-key joins. Why? The simple answer is that implementing these types of joins while also ensuring fault tolerance, scalability, and top-notch performance can be quite a challenge. So, it’s definitely a good idea to dig into how your specific streaming SQL framework handles joins and see if it supports both foreign and primary key joins, or just the latter.
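To see why foreign-key joins are harder than they look, consider this simplified plain-Java sketch of an order stream joined against a product table. `OrderProductJoin` and its methods are hypothetical, and the sketch only handles the easy direction: looking up the product when an order arrives. A real engine also has to deal with products that arrive or change after the order, and it has to do so across partitions with fault tolerance, which is where the complexity comes from.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// Illustrative only: a lookup-style join held entirely in memory.
final class OrderProductJoin {
    // The "table" side, keyed by the product's primary key.
    private final Map<String, String> productNameById = new HashMap<>();

    void onProduct(String productId, String name) {
        productNameById.put(productId, name);
    }

    // The order carries productId as a foreign key. With inner-join semantics,
    // an order with no matching product yields no result.
    Optional<String> onOrder(String orderId, String productId) {
        String name = productNameById.get(productId);
        if (name == null) {
            return Optional.empty();
        }
        return Optional.of(orderId + " " + productId + " " + name);
    }

    public static void main(String[] args) {
        OrderProductJoin join = new OrderProductJoin();
        join.onProduct("p1", "keyboard");

        System.out.println(join.onOrder("o1", "p1")); // matched and enriched
        System.out.println(join.onOrder("o2", "p9")); // no such product yet
    }
}
```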

So far, we’ve explored some of the fundamental functions of streaming SQL. However, right now, the results from these queries aren’t really driving anything super complex. As they stand, you’d basically just be sending them into another event stream for some downstream service to pick up. That brings us neatly to our next pattern: the sidecar.

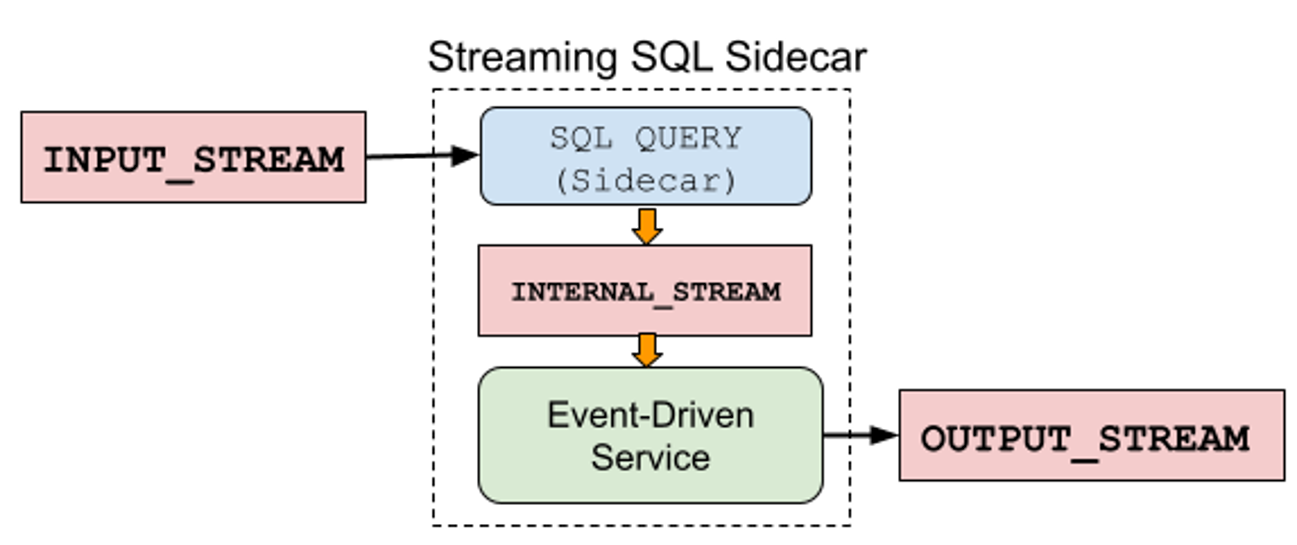

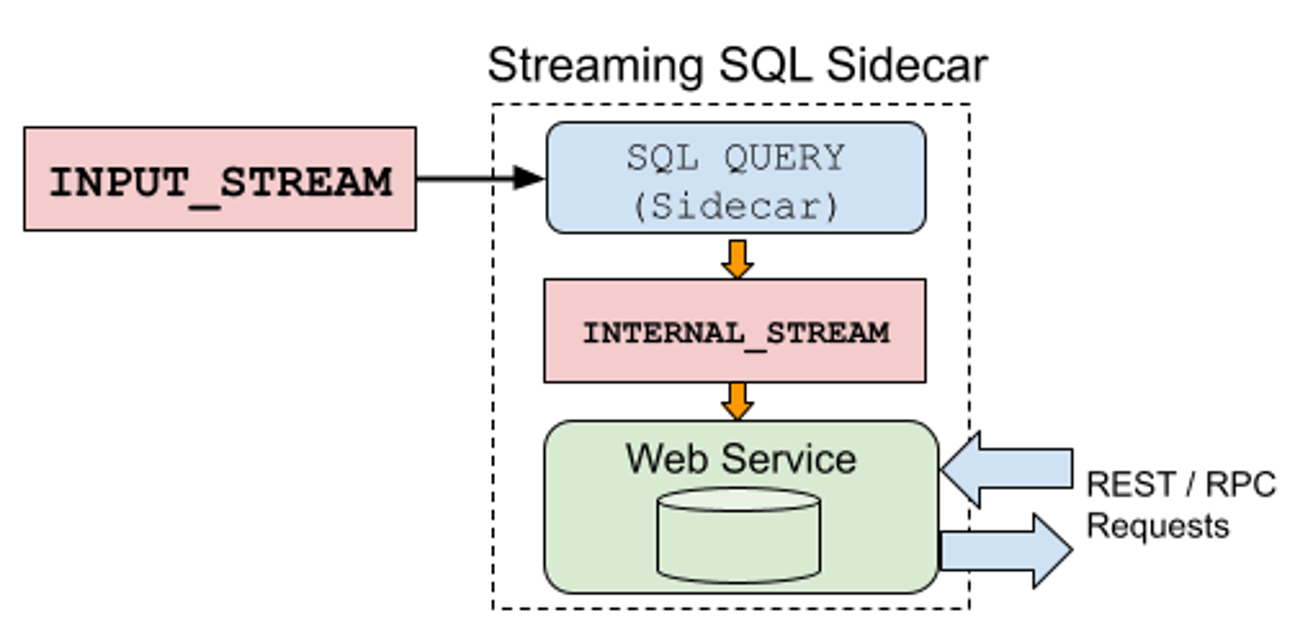

Pattern 4: The streaming SQL sidecar

The streaming SQL sidecar pattern is pretty neat! It lets you harness all the power of a full-featured stream processing engine, like Flink or Kafka Streams, without needing to write your core business logic in the same language. Basically, the streaming SQL component handles all the rich stream processing goodness—think aggregations and joins—while your downstream application gets to process the resulting event stream in its *own* independent runtime. How cool is that?

Here’s how you connect a streaming SQL query to an event-driven service using an internal stream.

Confluent

In this example, INTERNAL_STREAM is essentially a Kafka topic where the SQL sidecar writes all its computed results. Then, your event-driven service consumes those events from the INTERNAL_STREAM, processes them as needed, and might even send out new events to the OUTPUT_STREAM.

Another popular way to use the sidecar pattern is for preparing data to be served by a web service to other applications. How does it work? The consumer picks up data from the INPUT_STREAM, processes it, and then makes it available for the web service to store in its own state. From there, it can easily handle request/response queries from other services, like REST and RPC requests.

See how the sidecar pattern can power a web service to handle REST / RPC requests!

Confluent
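Here is a rough idea of what the service side of that arrangement might look like, sketched in plain Java. Everything in it is an assumption made for illustration: a `Queue` stands in for the stream the service reads, events are simple "userId,count" strings, and `SidecarBackedService` is a hypothetical class. A real service would use a Kafka consumer and a proper web framework.

```java
import java.util.ArrayDeque;
import java.util.HashMap;
import java.util.Map;
import java.util.Queue;

// Illustrative only: a queue stands in for the event stream the service consumes.
final class SidecarBackedService {
    // The service's own state, built from the results computed by streaming SQL.
    private final Map<String, Integer> loginCountByUser = new HashMap<>();

    // Drains pending "userId,count" events into local state.
    // Returns how many events were applied.
    int poll(Queue<String> stream) {
        int applied = 0;
        String event;
        while ((event = stream.poll()) != null) {
            String[] parts = event.split(",", 2);
            loginCountByUser.put(parts[0], Integer.parseInt(parts[1]));
            applied++;
        }
        return applied;
    }

    // Stand-in for a REST or RPC handler: answers from local state only.
    int handleGet(String userId) {
        return loginCountByUser.getOrDefault(userId, 0);
    }

    public static void main(String[] args) {
        Queue<String> stream = new ArrayDeque<>();
        stream.add("alice,3");
        stream.add("bob,1");
        stream.add("alice,4"); // a newer result for alice replaces the older one

        SidecarBackedService service = new SidecarBackedService();
        System.out.println("applied: " + service.poll(stream));
        System.out.println("alice: " + service.handleGet("alice"));
        System.out.println("carol: " + service.handleGet("carol"));
    }
}
```

The point of the split is that the request handler never touches the stream processing logic. It only reads the state that the SQL results have already populated.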

While the sidecar pattern opens up a ton of possibilities for extra capabilities, you *do* need to build, manage, and deploy that sidecar service right alongside your SQL queries. But here’s a huge plus for this pattern: you can really lean on streaming SQL to take care of all those complex streaming transformations and logic without having to completely overhaul your entire tech stack. Instead, you simply plug the streaming SQL results into your existing setup, letting you build your web services and other applications using the same tools you’ve always loved.

Even more cool use cases!

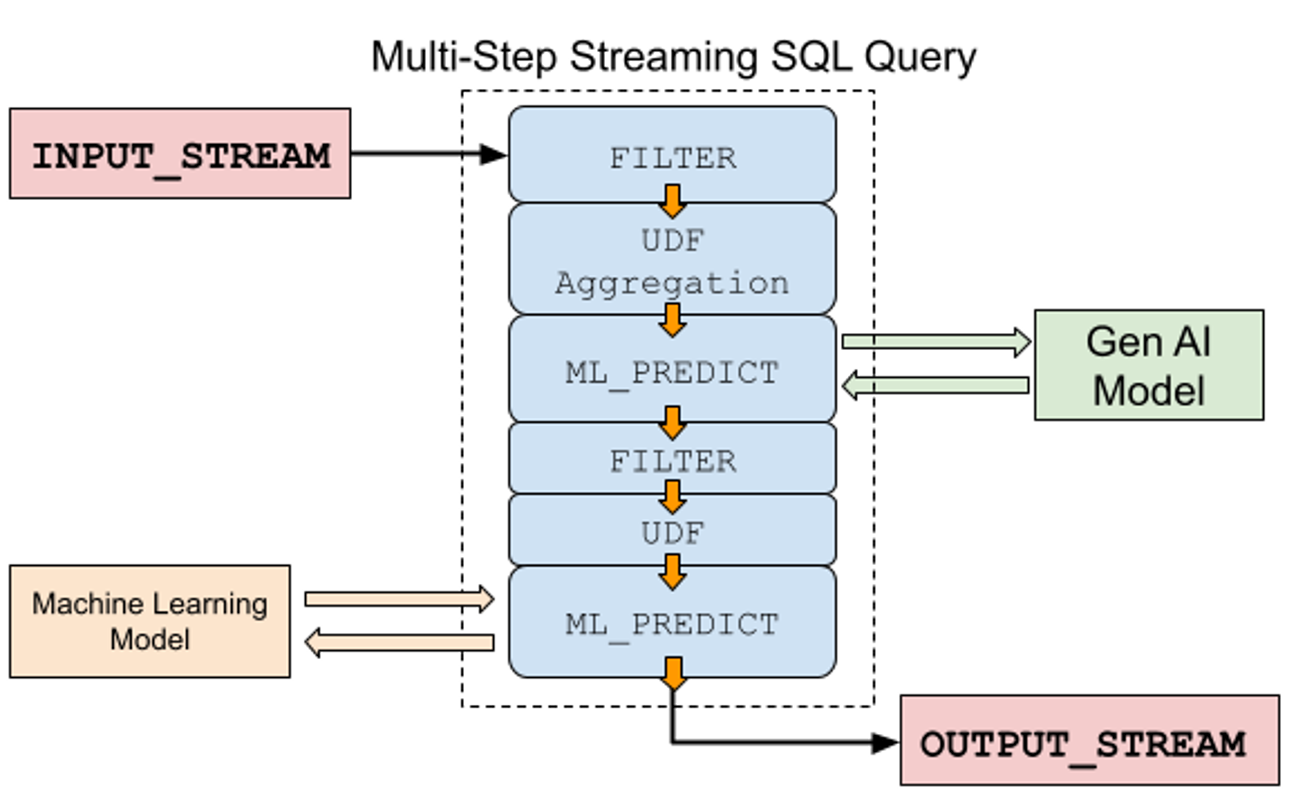

Each of these patterns shows operations by themselves, which might be enough for simpler applications. But for more complicated business needs, chances are you’ll be chaining multiple patterns together, with the results from one feeding directly into the next. For example, you might first filter some data, then apply a UDF, and *then* make a call to an ML or AI model using ML_PREDICT, just like you see in the first part of the example below. The streaming SQL then filters those results from the first ML_PREDICT call, applies another UDF, and finally sends those results to a last ML model before writing everything to the OUTPUT_STREAM.

You can chain multiple SQL streaming operations together to handle even the most complex scenarios!

Confluent
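As a loose analogy for that kind of chain, here is a plain-Java pipeline that filters, applies a function, calls a model, and filters again. None of it is Flink code. `ChainedPipeline` is hypothetical, `normalize` stands in for a UDF, and `predictSentiment` is a keyword rule standing in for a real ML_PREDICT call.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Locale;
import java.util.stream.Collectors;

// Illustrative only: each stage stands in for one streaming SQL operation.
final class ChainedPipeline {

    // Stand-in for a UDF: trims and lowercases the text.
    static String normalize(String text) {
        return text.trim().toLowerCase(Locale.ROOT);
    }

    // Stand-in for a model call. This is a keyword rule, NOT a real model.
    static String predictSentiment(String text) {
        if (text.contains("great")) return "positive";
        if (text.contains("broken")) return "negative";
        return "neutral";
    }

    static List<String> run(List<String> input) {
        return input.stream()
                .filter(text -> !text.trim().isEmpty())            // 1. filter out empty events
                .map(ChainedPipeline::normalize)                    // 2. apply the "UDF"
                .map(text -> text + " -> " + predictSentiment(text)) // 3. call the "model"
                .filter(result -> !result.endsWith("neutral"))      // 4. filter the model output
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<String> events = Arrays.asList("  Great product ", "", "It arrived BROKEN", "ok");
        for (String result : run(events)) {
            System.out.println(result);
        }
    }
}
```

In streaming SQL each of these stages would run continuously over the event stream rather than once over a list, but the shape of the chain is the same.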

Streaming SQL is often provided as a serverless option by many cloud providers, making it a super fast and easy way to get your streaming data services up and running. With its awesome built-in functions, flexible UDFs, materialized results, and powerful integrations with ML and AI models, streaming SQL has definitely earned its place as a strong contender when you’re thinking about building microservices.

—

New Tech Forum is your go-to spot where tech leaders—including our awesome vendors and other expert contributors—can really dive deep into and chat about the latest emerging enterprise technologies. We handpick topics we think are crucial and most interesting to our InfoWorld readers. Just so you know, InfoWorld never publishes marketing materials and always keeps the right to edit all contributed content. Got questions? Send all inquiries to doug_dineley@foundryco.com.